We are happy to announce Adapters, the new library at the heart of the AdapterHub framework.

Adapters continues the tradition of our previous work on the adapter-transformers library, while revamping the implementation from the ground up and smoothing many of the rough edges of its predecessor.

This blog post summarizes the most important aspects of Adapters, as described in detail in our paper (to be presented as a system demo at EMNLP 2023).

In the summer of 2020, when we released the first version of AdapterHub along with the adapter-transformers library, adapters and parameter-efficient fine-tuning were still a niche research topic.

Adapters had been introduced to Transformer models only shortly before (Houlsby et al., 2019), and AdapterHub was the very first framework to provide comprehensive tools for working with adapters, dramatically lowering the barrier to training one's own adapters or leveraging pre-trained ones.

In the more than three years since, AdapterHub has steadily gained traction within the NLP community, being liked by thousands and used by hundreds of researchers in their work. However, the field of parameter-efficient fine-tuning has grown even faster. Nowadays, as recent LLMs grow ever larger, adapter methods, which do not fine-tune the full model but instead update only a small number of parameters, have become increasingly mainstream. Multiple libraries, dozens of architectures and scores of applications make up a flourishing subfield of LLM research.

Besides parameter efficiency, modularity is a second important characteristic of adapters (Pfeiffer et al., 2023). Sadly, this aspect is overlooked by many existing tools. From the beginning, AdapterHub paid special attention to adapter modularity and composition, integrating setups like MAD-X (Pfeiffer et al., 2020). Adapters continues and expands this focus on modularity.

The Library

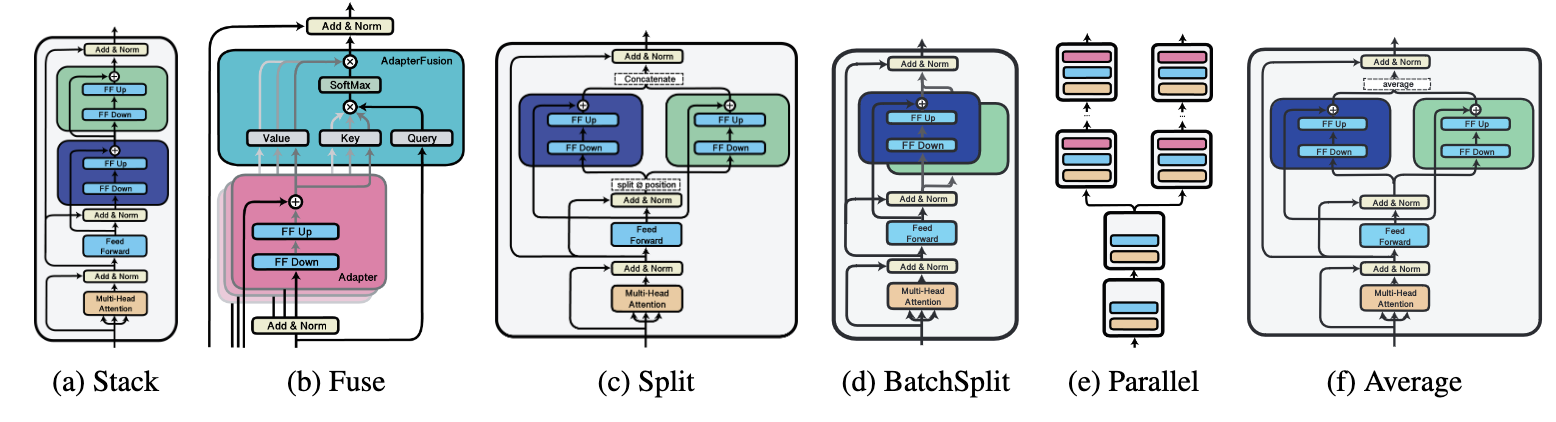

Adapters is a self-contained library supporting a diverse set of adapter methods, integrating them into many common Transformer architectures and allowing flexible and complex adapter configuration. Modular transfer learning can be achieved by combining adapters via six different composition blocks.

All in all, Adapters offers substantial improvements compared to the initial adapter-transformers library:

- Decoupled from the HuggingFace transformers library
- Support for 10 adapter methods
- Support for 6 composition blocks
- Support for 20 diverse models

Adapters can be easily installed via pip:

```shell
pip install adapters
```

The source code of Adapters can be found on GitHub.

In the following, we highlight important components of Adapters.

If you have used adapter-transformers before, much of this will look familiar. In that case, you may want to jump directly to our transitioning guide, which highlights the relevant differences between Adapters and adapter-transformers.

The additions and changes compared to the latest version of adapter-transformers can also be found in our release notes.

Transformers Integration

Adapters acts as an add-on to HuggingFace's Transformers library. As a result, adapter functionality can easily be attached to existing Transformers models as follows:

```python
import adapters
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("t5-base")
adapters.init(model)  # add adapter-specific functionality to the model

model.add_adapter("adapter0")
```

However, we recommend using the model classes provided by Adapters, such as XXXAdapterModel, where "XXX" denotes the model architecture (e.g., BertAdapterModel). These models provide adapter functionality without further initialization and support multiple prediction heads.

The latter is especially relevant when using composition blocks that produce multiple outputs, for instance, the BatchSplit composition block. Here's an example of how to use such an XXXAdapterModel class:

```python
from adapters import AutoAdapterModel

model = AutoAdapterModel.from_pretrained("roberta-base")
model.add_adapter("adapter1", config="seq_bn")  # add a new adapter to the model
model.add_classification_head("adapter1", num_labels=3)  # add a sequence classification head
model.train_adapter("adapter1")  # freeze the model weights and activate the adapter
```
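To see why multiple heads matter for a block like BatchSplit, it helps to picture what the block does: different slices of one input batch are routed through different adapters. The sketch below is a hypothetical, framework-free toy and not the library's BatchSplit class. Adapters are plain Python functions, a list of vectors stands in for a batch, the adapter names "sentiment" and "topic" are made up, and the function returns one output list per adapter to mimic one head per adapter.

```python
from typing import Callable, Dict, List

Vector = List[float]
AdapterFn = Callable[[Vector], Vector]


def batch_split(batch: List[Vector], names: List[str], batch_sizes: List[int],
                adapters: Dict[str, AdapterFn]) -> Dict[str, List[Vector]]:
    """Send consecutive slices of the batch through different adapters.

    Returns one list of outputs per adapter, e.g. to feed one prediction head each.
    """
    if len(names) != len(batch_sizes) or sum(batch_sizes) != len(batch):
        raise ValueError("batch_sizes must match the adapters and cover the whole batch")
    outputs: Dict[str, List[Vector]] = {}
    start = 0
    for name, size in zip(names, batch_sizes):
        # The slice [start, start + size) is processed only by adapter `name`.
        outputs[name] = [adapters[name](h) for h in batch[start:start + size]]
        start += size
    return outputs


# Hypothetical toy adapters standing in for trained adapter modules.
task_adapters: Dict[str, AdapterFn] = {
    "sentiment": lambda h: [x + 1.0 for x in h],
    "topic": lambda h: [x * 2.0 for x in h],
}
batch = [[1.0], [2.0], [3.0]]
print(batch_split(batch, ["sentiment", "topic"], [1, 2], task_adapters))
# {'sentiment': [[2.0]], 'topic': [[4.0], [6.0]]}
```

Because each slice ends up with a different adapter, a single shared head would mix outputs from unrelated tasks; keeping one output group per adapter is what makes per-adapter heads useful here.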

Adapter Methods

Each adapter method is defined by a configuration object or string, allowing for flexible customization of various adapter module properties, including placement, capacity, residual connections, initialization, etc. We distinguish between single methods consisting of one type of adapter module and complex methods consisting of multiple different adapter module types.

Single Methods

Adapters supports single adapter methods that introduce parameters in new feed-forward modules such as bottleneck adapters (Houlsby et al., 2019), introduce prompts at different locations such as prefix tuning (Li and Liang, 2021), reparameterize existing modules such as LoRA (Hu et al., 2022) or re-scale their output representations such as (IA)³ (Liu et al., 2022). For more information, see our documentation.
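To make these families of single methods more concrete, here is a minimal pure-Python sketch of how a bottleneck adapter (new feed-forward module), a LoRA update (reparameterization) and an (IA)³-style rescaling each modify a hidden vector. It is an illustration under simplifying assumptions (one vector instead of a batch, tiny hand-picked matrices, ReLU as the bottleneck nonlinearity, no biases) and not the library's implementation; all function names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[i] is the i-th row


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def bottleneck_adapter(h: Vector, w_down: Matrix, w_up: Matrix) -> Vector:
    """New feed-forward module: down-project, ReLU, up-project, add the residual."""
    z = [max(0.0, x) for x in matvec(w_down, h)]
    return [a + b for a, b in zip(h, matvec(w_up, z))]


def lora_linear(x: Vector, w: Matrix, a: Matrix, b: Matrix, alpha: float, r: int) -> Vector:
    """Reparameterized layer: W x + (alpha / r) * B A x; W stays frozen, A and B are trained."""
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    return [p + (alpha / r) * q for p, q in zip(base, delta)]


def ia3_rescale(x: Vector, w: Matrix, scale: Vector) -> Vector:
    """(IA)^3-style: rescale the frozen layer's output elementwise with a learned vector."""
    return [s * y for s, y in zip(scale, matvec(w, x))]


eye = [[1.0, 0.0], [0.0, 1.0]]  # frozen weight matrix used for the LoRA and (IA)^3 examples
w_down = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # 4 -> 2 (reduction factor 2)
w_up = [[0.5, 0.0], [0.0, 0.5], [0.0, 0.0], [0.0, 0.0]]  # 2 -> 4

print(bottleneck_adapter([1.0, -2.0, 3.0, 0.5], w_down, w_up))  # [1.5, -2.0, 3.0, 0.5]
print(lora_linear([2.0, 3.0], eye, a=[[1.0, 1.0]], b=[[1.0], [0.0]], alpha=2.0, r=1))  # [12.0, 3.0]
print(ia3_rescale([2.0, 3.0], eye, scale=[1.0, 0.5]))  # [2.0, 1.5]
```

In LoRA, B is typically initialized to zero, so the reparameterized layer starts out identical to the frozen one; passing b=[[0.0], [0.0]] above reproduces W x exactly.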

All adapter methods can be added to a model by the unified add_adapter() method, e.g.:

```python
model.add_adapter("adapter2", config="seq_bn")
```

Alternatively, you can pass an instance of a configuration class along with custom parameters:

```python
from adapters import PrefixTuningConfig

model.add_adapter("adapter3", config=PrefixTuningConfig(prefix_length=20))
```
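Prefix tuning leaves the model weights untouched and instead prepends trainable vectors to the keys and values of the attention layers. The following pure-Python sketch shows the effect for a single attention head and a single query. It is illustrative only: the prefix values are made up, the function names are hypothetical, and it omits details of the original method (Li and Liang, 2021), such as reparameterizing the prefix through an MLP during training.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def attend_with_prefix(query: Vector, keys: List[Vector], values: List[Vector],
                       prefix_keys: List[Vector], prefix_values: List[Vector]) -> Vector:
    """Prefix tuning: prepend trainable key/value vectors to the frozen ones."""
    return attend(query, prefix_keys + keys, prefix_values + values)


prefix_length = 20  # mirrors PrefixTuningConfig(prefix_length=20) above
prefix_keys = [[0.0, 0.0] for _ in range(prefix_length)]    # hypothetical learned values
prefix_values = [[0.0, 0.0] for _ in range(prefix_length)]
token_keys, token_values = [[0.0, 0.0]], [[21.0, 42.0]]

print(attend([0.0, 0.0], token_keys, token_values))  # [21.0, 42.0] without a prefix
print(attend_with_prefix([0.0, 0.0], token_keys, token_values, prefix_keys, prefix_values))
```

With a zero query, every key receives the same weight, so the single real token now shares attention with the 20 prefix positions and the output shrinks to roughly one twenty-first of its original value. Training the prefix vectors is what lets the method steer the frozen model's attention.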

The following table gives an overview of many of the currently supported methods, along with their configuration class and identifier string:

| Identifier | Configuration class | More information |
|---|---|---|
| [double_]seq_bn | [Double]SeqBnConfig() | Bottleneck Adapters |
| par_bn | ParBnConfig() | Bottleneck Adapters |
| [double_]seq_bn_inv | [Double]SeqBnInvConfig() | Invertible Adapters |
| compacter[++] | Compacter[PlusPlus]Config() | Compacter |
| prefix_tuning | PrefixTuningConfig() | Prefix Tuning |
| lora | LoRAConfig() | LoRA |
| ia3 | IA3Config() | (IA)³ |
| mam | MAMConfig() | Mix-and-Match Adapters |
| unipelt | UniPELTConfig() | UniPELT |
| prompt_tuning | PromptTuningConfig() | Prompt Tuning |

For more details on all adapter methods, visit our documentation.

Complex Methods

While different efficient fine-tuning methods and configurations have often been proposed as standalone methods, combining them for joint training has proven to be beneficial (He et al., 2022; Mao et al., 2022). To make this process easier, Adapters provides the possibility to group multiple configuration instances using the ConfigUnion class. This flexible mechanism allows easy integration of complex methods proposed in the literature (such as the example outlined below), as well as the construction of new complex configurations that are neither available nor benchmarked in the literature yet (Zhou et al., 2023).

Example: Mix-and-Match Adapters (He et al., 2022) were proposed as a combination of Prefix-Tuning and parallel bottleneck adapters. Using ConfigUnion, this method can be defined as:

```python
from adapters import AutoAdapterModel, ConfigUnion, ParBnConfig, PrefixTuningConfig

model = AutoAdapterModel.from_pretrained("microsoft/deberta-v3-base")

adapter_config = ConfigUnion(
    PrefixTuningConfig(prefix_length=20),
    ParBnConfig(reduction_factor=4),
)
model.add_adapter("my_adapter", config=adapter_config, set_active=True)
```

Learn more about complex adapter configurations using ConfigUnion in our documentation.
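For a rough sense of scale of the ParBnConfig(reduction_factor=4) part of the union above, the size of a bottleneck adapter follows from simple arithmetic: with hidden size d and reduction factor r, the bottleneck has d/r units, and the adapter holds a d × d/r down-projection and a d/r × d up-projection. The sketch assumes one adapter per Transformer layer with biases on both projections, and a hidden size of 768 with 12 layers, which we believe matches microsoft/deberta-v3-base. It ignores the prefix-tuning part and any additional parameters the library may add, so treat the numbers as approximate.

```python
def bottleneck_params(hidden_size: int, reduction_factor: int, num_layers: int) -> int:
    """Count weights and biases of one down- and one up-projection per layer (assumed layout)."""
    bottleneck = hidden_size // reduction_factor
    down = hidden_size * bottleneck + bottleneck  # d x (d/r) weights + bias
    up = bottleneck * hidden_size + hidden_size   # (d/r) x d weights + bias
    return (down + up) * num_layers


# Assumed DeBERTa-v3-base dimensions: hidden size 768, 12 layers.
print(bottleneck_params(768, reduction_factor=4, num_layers=12))  # 3550464
print(bottleneck_params(768, reduction_factor=2, num_layers=12))  # 7091712
```

Halving the reduction factor roughly doubles the adapter size, which is why reduction_factor acts as the main capacity knob for bottleneck adapters.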

Modularity and Composition Blocks

While the modularity and composability aspects of adapters have seen increasing interest in research, existing open-source libraries (Mangrulkar et al., 2022; Hu et al., 2023a) have largely overlooked them. Adapters makes adapter composition a central and accessible part of working with adapters by enabling the definition of complex, composed adapter setups. We define a set of simple composition blocks, each of which captures a specific way of aggregating the functionality of multiple adapters. Each composition block class takes a sequence of adapter identifiers plus optional configuration as arguments. The defined adapter setup is then parsed at runtime by Adapters, allowing dynamic switching between adapters per forward pass. The different composition blocks are illustrated in the figure above.

An example composition could look as follows:

```python
import adapters.composition as ac

# ...

config = "mam"  # mix-and-match adapters
model.add_adapter("a", config=config)
model.add_adapter("b", config=config)
model.add_adapter("c", config=config)

model.set_active_adapters(ac.Stack("a", ac.Parallel("b", "c")))

print(model.active_adapters)  # The active config is: Stack[a, Parallel[b, c]]
```

With this setup activated, inputs in each layer would first flow through adapter "a" before being forwarded through "b" and "c" in parallel.
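The semantics of Stack and Parallel can be modeled in a few lines of plain Python. The sketch below is a hypothetical toy, not the library's classes: adapters are ordinary functions on vectors, a list of vectors stands in for a batch, and Parallel concatenates the outputs of its branches along that batch axis (our simplifying assumption about how to represent parallel outputs). The printed representation mirrors the format shown in the example above.

```python
from typing import Callable, Dict, List, Union

Vector = List[float]
AdapterFn = Callable[[Vector], Vector]


class Block:
    """Hypothetical toy composition block; children are adapter names or nested blocks."""

    def __init__(self, *children: Union[str, "Block"]) -> None:
        self.children = list(children)

    def __repr__(self) -> str:
        inner = ", ".join(str(child) for child in self.children)
        return f"{type(self).__name__}[{inner}]"


class Stack(Block):
    """Children are applied one after another."""


class Parallel(Block):
    """Each child processes its own copy of the input."""


def run(block: Union[str, Block], batch: List[Vector], adapters: Dict[str, AdapterFn]) -> List[Vector]:
    """Apply a (possibly nested) composition to a batch of hidden states."""
    if isinstance(block, str):  # a single adapter
        return [adapters[block](h) for h in batch]
    if isinstance(block, Stack):  # feed the output of each child into the next
        for child in block.children:
            batch = run(child, batch, adapters)
        return batch
    if isinstance(block, Parallel):  # concatenate all branches along the batch axis
        outputs: List[Vector] = []
        for child in block.children:
            outputs.extend(run(child, batch, adapters))
        return outputs
    raise TypeError(f"unsupported block: {block!r}")


toy_adapters: Dict[str, AdapterFn] = {
    "a": lambda h: [x + 1.0 for x in h],
    "b": lambda h: [x * 2.0 for x in h],
    "c": lambda h: [x * 10.0 for x in h],
}
setup = Stack("a", Parallel("b", "c"))
print(setup)                              # Stack[a, Parallel[b, c]]
print(run(setup, [[1.0]], toy_adapters))  # [[4.0], [20.0]]
```

In this toy, adapter "a" runs once per input and its output is shared by both parallel branches, so one input yields two outputs, one per branch.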

To learn more, check out this blog post and our documentation.

Evaluating Adapter Performance

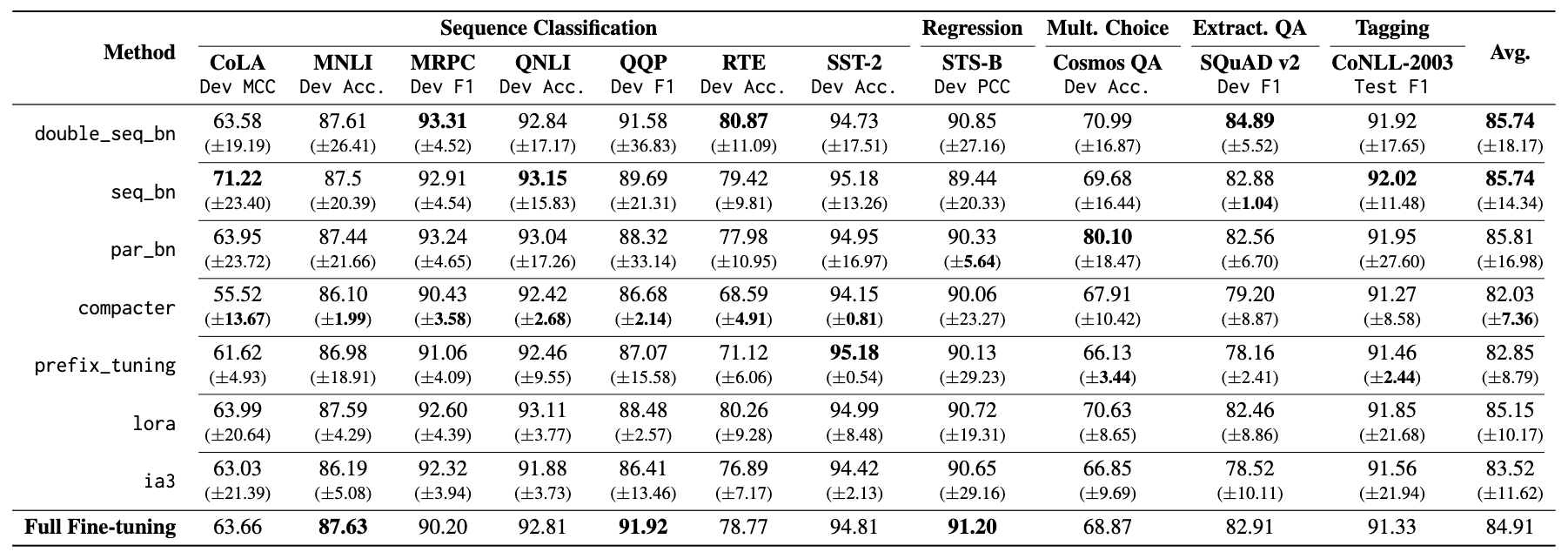

In addition to the aforementioned ease of use, we show that the adapter methods offered by our library are performant across a range of settings. To this end, we conduct evaluations on the single adapter implementations made available by Adapters.

Results are shown in Figure 2. The main takeaway from our evaluations is that all adapter implementations offered by our framework are competitive with full model fine-tuning across all task classes. Approaches that offer more tunable hyperparameters (and thus allow for easy scaling), such as bottleneck adapters, LoRA, and prefix tuning, predictably achieve the highest top-line performance, often surpassing full fine-tuning. However, extremely parameter-frugal methods like (IA)³, which add less than 0.005% of the parameters of the base model, also perform commendably and fall short by only a small margin. Finally, Compacter is the least volatile among the single methods, obtaining the lowest standard deviation between runs on the majority of tasks.

Conclusion

The field of adapter/PEFT methods will continue to advance rapidly and gain importance.

Even now, various interesting and promising approaches are not yet covered by AdapterHub and the Adapters library.

However, Adapters aims to provide a solid new foundation for research on and application of adapters, upon which new and extended methods can be added successively in the future.

Adapters has a clear focus on the parameter efficiency and modularity of adapters and builds on the rich and successful history of AdapterHub and adapter-transformers.

In the end, integrating the latest research into the library is a community effort, and we invite you to contribute in one of many possible ways.

References

- He, J., Zhou, C., Ma, X., Berg-Kirkpatrick, T., & Neubig, G. (2022). Towards a Unified View of Parameter-Efficient Transfer Learning. In International Conference on Learning Representations.

- Houlsby, N., Giurgiu, A., Jastrzebski, S., Morrone, B., de Laroussilhe, Q., Gesmundo, A., Attariyan, M., & Gelly, S. (2019). Parameter-Efficient Transfer Learning for NLP. In International Conference on Machine Learning.

- Hu, E. J., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., Wang, L., & Chen, W. (2022). LoRA: Low-Rank Adaptation of Large Language Models. In International Conference on Learning Representations.

- Li, X. L., & Liang, P. (2021). Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) (pp. 4582-4597).

- Liu, H., Tam, D., Muqeeth, M., Mohta, J., Huang, T., Bansal, M., & Raffel, C. A. (2022). Few-shot parameter-efficient fine-tuning is better and cheaper than in-context learning. Advances in Neural Information Processing Systems, 35, 1950-1965.

- Mao, Y., Mathias, L., Hou, R., Almahairi, A., Ma, H., Han, J., ... & Khabsa, M. (2022). UniPELT: A Unified Framework for Parameter-Efficient Language Model Tuning. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 6253-6264).

- Pfeiffer, J., Vulić, I., Gurevych, I., & Ruder, S. (2020). MAD-X: An Adapter-based Framework for Multi-task Cross-lingual Transfer. arXiv preprint arXiv:2005.00052.

- Pfeiffer, J., Ruder, S., Vulić, I., & Ponti, E. M. (2023). Modular Deep Learning. arXiv preprint arXiv:2302.11529.

- Zhou, H., Wan, X., Vulić, I., & Korhonen, A. (2023). AutoPEFT: Automatic Configuration Search for Parameter-Efficient Fine-Tuning. arXiv preprint arXiv:2301.12132.

Citation

```bibtex
@inproceedings{poth-etal-2023-adapters,
    title = "Adapters: A Unified Library for Parameter-Efficient and Modular Transfer Learning",
    author = {Poth, Clifton and
      Sterz, Hannah and
      Paul, Indraneil and
      Purkayastha, Sukannya and
      Engl{\"a}nder, Leon and
      Imhof, Timo and
      Vuli{\'c}, Ivan and
      Ruder, Sebastian and
      Gurevych, Iryna and
      Pfeiffer, Jonas},
    booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing: System Demonstrations",
    month = dec,
    year = "2023",
    address = "Singapore",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.emnlp-demo.13",
    pages = "149--160",
}
```