Over the last few months, we have worked on improving the adapter-transformers library and adding new features. These include support for new models such as CLIP and BEiT, more flexible adapter configuration, and adapter composition for prefix tuning. Below, we describe the new features and updates in more detail.

You can find version 3.2 of adapter-transformers on GitHub or install it via pip:

pip install -U adapter-transformers

Support for adapter configuration strings

When running experiments at a large scale with varying hyperparameters, setting the correct hyperparameters for every script run can be tedious. You can now configure an adapter with a single string. Previous versions already allowed selecting one of the predefined configurations via a string, e.g. pfeiffer. From v3.2 on, individual parameters can also be adapted within the string.

To create a Pfeiffer adapter with a reduction factor of 16, you can now use pfeiffer[reduction_factor=16]. This is also useful when running the example scripts.
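For a hyperparameter sweep, such strings can be generated programmatically. The following is a minimal sketch in plain Python. The helper `adapter_config_string` and the chosen reduction factors are hypothetical and not part of the library; the sketch only covers the single-override form `name[key=value]` shown above.

```python
def adapter_config_string(base: str, key: str, value) -> str:
    """Format one override in the 'name[key=value]' style shown above."""
    return f"{base}[{key}={value}]"


# Hypothetical sweep over the reduction factor of a Pfeiffer adapter.
reduction_factors = [2, 8, 16]
sweep = [
    adapter_config_string("pfeiffer", "reduction_factor", rf)
    for rf in reduction_factors
]

for config in sweep:
    # Each string could be passed wherever the library expects an adapter
    # configuration (assumption: not exercised in this sketch).
    print(config)
```

Each generated string can then be used for one experiment run, so the training script itself does not need to change between runs.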

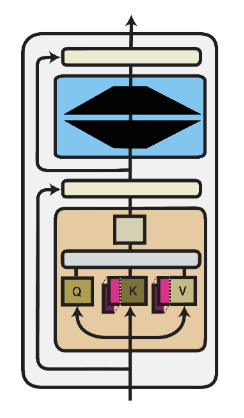

Adapter Composition for Prefix Tuning

Parameter-efficient fine-tuning methods have proven to be modular. Combining multiple adapters can be beneficial for transfer learning across languages. In v3.2, we add Stack, Parallel, and BatchSplit compositions to prefix tuning.

In previous versions of adapter-transformers, you could combine multiple bottleneck adapters by stacking them or using them in parallel. This is now also possible for prefix tuning adapters: add multiple prefixes to the same model to combine the functionality of multiple adapters (Stack) or to perform several tasks simultaneously (Parallel, BatchSplit).
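To make the difference between the three blocks concrete, here is a toy sketch in plain Python of how each one routes the examples of a batch to adapters. It is a conceptual illustration only, not the library's implementation: the classes `ToyStack`, `ToyParallel`, and `ToyBatchSplit` and the function `route` are hypothetical stand-ins, and the routing rules reflect our reading of the composition blocks (Stack applies all adapters to every example, Parallel replicates the batch once per adapter, BatchSplit assigns consecutive slices of the batch to different adapters).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ToyStack:
    adapters: Tuple[str, ...]


@dataclass
class ToyParallel:
    adapters: Tuple[str, ...]


@dataclass
class ToyBatchSplit:
    adapters: Tuple[str, ...]
    batch_sizes: Tuple[int, ...]


def route(block, batch: List[str]) -> List[Tuple[str, Tuple[str, ...]]]:
    """Return (example, adapters applied to it) pairs for a toy batch."""
    if isinstance(block, ToyStack):
        # Every example sees all adapters of the stack.
        return [(x, block.adapters) for x in batch]
    if isinstance(block, ToyParallel):
        # The whole batch is replicated once per adapter.
        return [(x, (name,)) for name in block.adapters for x in batch]
    if isinstance(block, ToyBatchSplit):
        if sum(block.batch_sizes) != len(batch):
            raise ValueError("batch_sizes must sum to the batch size")
        routed, start = [], 0
        for name, size in zip(block.adapters, block.batch_sizes):
            # Each adapter gets its own consecutive slice of the batch.
            routed += [(x, (name,)) for x in batch[start:start + size]]
            start += size
        return routed
    raise TypeError(f"unsupported block: {block!r}")


batch = ["x1", "x2", "x3"]
print(route(ToyStack(("a", "b")), batch))
print(route(ToyParallel(("a", "b")), batch))
print(route(ToyBatchSplit(("a", "b"), (1, 2)), batch))
```

In this toy model, Parallel doubles the effective batch for two adapters, while BatchSplit keeps the batch size unchanged. That is the practical trade-off between the two multi-task options.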

Enable parallel sequence generation with adapters

In v3.2, you can use the Parallel block in combination with the model.generate() method. This allows generating text with multiple adapters simultaneously. As a result, generation can now be used in a multi-task inference setup, producing text for multiple tasks within one forward pass.
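A usage pattern for this could look like the sketch below. The helper `generate_per_adapter` is hypothetical. It assumes that the adapters have already been added to the model, that `parallel_block` is a Parallel composition over `adapter_names` (for example `Parallel("task_a", "task_b")` from `transformers.adapters.composition`), and that the generated batch contains one sequence per adapter in the order of the block. Please verify the last assumption against the documentation for your setup.

```python
def generate_per_adapter(model, tokenizer, prompt, parallel_block,
                         adapter_names, **generate_kwargs):
    """Generate one continuation per adapter for a single prompt.

    Assumption: the output batch of `model.generate()` holds exactly one
    sequence per adapter, ordered like `adapter_names`.
    """
    # Activate all adapters side by side via the Parallel block.
    model.active_adapters = parallel_block

    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, **generate_kwargs)
    texts = tokenizer.batch_decode(outputs, skip_special_tokens=True)

    if len(texts) != len(adapter_names):
        raise ValueError(
            f"expected {len(adapter_names)} sequences, got {len(texts)}"
        )
    # Map each adapter name to the text generated with it.
    return dict(zip(adapter_names, texts))
```

The explicit length check guards against the ordering assumption being silently violated, for instance when more than one prompt is passed in.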

New model integrations

Version 3.2 of adapter-transformers adds adapter support for several new models:

- BEiT

- GPT-J

- CLIP

- ALBERT

- BertGeneration

Other notable changes

⚠️ Breaking change: The latest release removes the MultiLingAdapterArguments class which was previously used to add adapter support to training scripts.

We now recommend using the AdapterArguments class and the setup_adapter_training method instead.

Finally, version 3.2 of adapter-transformers updates the underlying transformers version from v4.23.1 to v4.26.1.

Fixes

- Fixes for GLUE & dependency parsing example script

- Fix access to shared parameters of compacter (e.g. during sequence generation)

- Fix reference to adapter configs in T5EncoderModel

- Fix DeBERTa prefix tuning with enabled relative attention

- Fix gating for prefix tuning layers

- Fix input to T5 adapter layers

- Fix AdapterTrainer hyperparameter tuning

- Move loading best adapter to AdapterTrainer class

- Make HuggingFace Hub Mixin work with newer utilities

- Only compute fusion reg loss if the fusion layer is trained

References

- Pfeiffer, J., Ruder, S., Vulic, I., & Ponti, E. (2023). Modular Deep Learning. ArXiv, abs/2302.11529.